Today’s high-performance computing systems are heterogeneous. They usually consist of several general-purpose CPUs (with ISAs that vary by target domain: ARM, x86, RISC-V) interconnected with domain-specific accelerators in various forms: GPUs, FPGAs, and accelerators integrated on chip as ASICs (application-specific integrated circuits).

Another important trend in architecture research and exploration is the convergence of computer architectures historically tied to different domains (desktop, mobile, HPC, embedded). HPC, cloud computing, embedded, and IoT systems all face an extreme energy-efficiency challenge: demand for exponentially increasing computing capability within a constant or decreasing power budget. In the past, technology scaling (Moore’s Law) answered this challenge, but that is no longer the case. Technology scaling is slowing down because of physical and economic limits, with multifaceted negative consequences (extreme consolidation of the semiconductor industry, exponentially increasing design cost in advanced technologies) and diminishing performance returns.

Hence, the whole computing field (industry and research) is turning to architecture and system design for faster and more affordable innovation. In that light, heterogeneity and specialization (co-design of application software and hardware) of computing systems have emerged as a promising solution to the performance/energy challenge. In the HPC domain, heterogeneity allows computing resources to be specialized for the application, which offers more performance at reduced power consumption and thus more efficient power management and cooling of large-scale HPC systems. In the embedded domain, heterogeneity enables more performance within a constrained, battery-provided energy budget.

In the HPC domain, the trend toward heterogeneity and specialization is already visible in production systems. The leading system architectures on the TOP500 and Green500 lists increasingly integrate hardware accelerators such as GPUs. Cloud computing facilities integrate FPGAs (e.g., Amazon EC2 F1, Microsoft Catapult) and custom ASICs (e.g., Google’s TensorFlow Research Cloud, Antminer Bitcoin-mining hardware) to achieve the most energy-efficient computing at scale. Intel’s acquisition of Altera, one of the two major FPGA suppliers, indicates that future computing platforms will add more heterogeneity in the form of reconfigurable fabric.

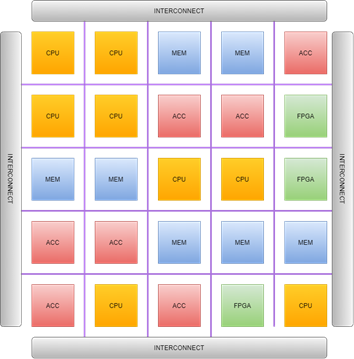

Architecture research provides insight into the details of computing systems and their characteristics (computing capability, power, area) when executing applications of interest. With heterogeneity as the driving factor in reaching exascale computing, this research must cover and explore a multidimensional space formed by the interplay and mix of general-purpose CPUs, domain-specific accelerators, interconnects, memories, and technologies, as illustrated in the figure.

An exascale computer needs to be carefully designed from basic building blocks that cover both general-purpose computing and application- or domain-specific computing with accelerators. Keeping data available close to the computing engines is also crucial and requires high-bandwidth interconnects to on-chip and off-chip memories, as shown in the figure below. Architects, together with application-domain specialists, need tools that enable flexible and scalable co-design of computing elements composed of these building blocks (CPUs, memories, accelerators, programmable logic, networks-on-chip, and off-chip interconnects).